Custom Servers

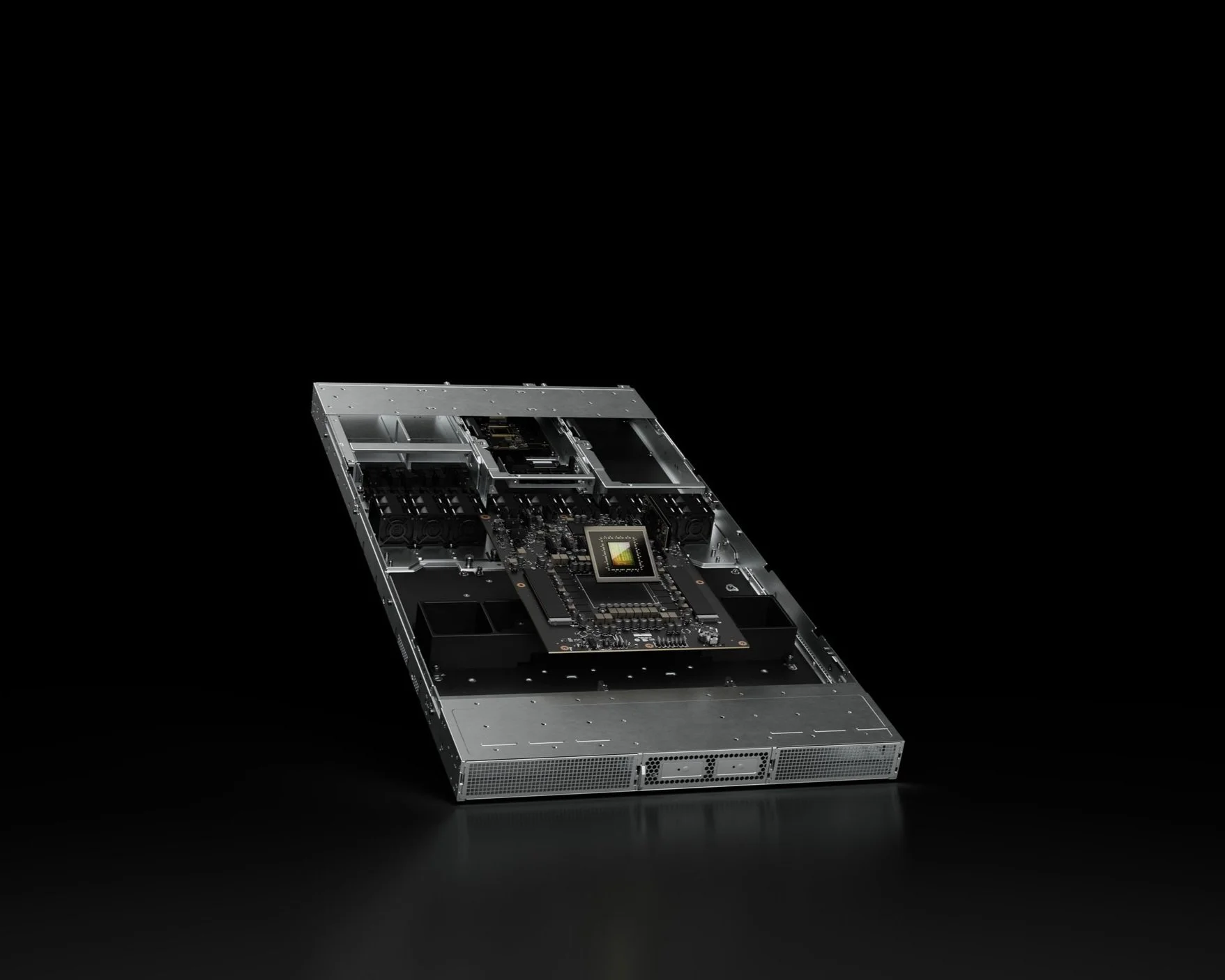

Purpose-Built Infrastructure for Compute-Intensive Workloads

Off-the-shelf servers aren’t built for the performance demands of scientific simulation, AI model training, real-time inference, or large-scale data processing. Our custom-built HPC and AI servers are engineered to deliver consistent performance, scalability, and reliability for these applications.

Designed for Heavy Lifting

We don’t just build servers—we design solutions that are purpose-built for the challenges you face. Every system we deliver is the result of a collaborative process, tailored to your specific workflow, data environment, and long-term performance goals. From selecting the right compute architecture to optimizing for thermal efficiency, scalability, and uptime, we focus on delivering hardware that accelerates your work—not limits it.

Whether you're deploying in a data center rack, an edge compute environment, or a research lab with evolving needs, our servers are engineered for your real-world demands. We don’t rely on generic templates or one-size-fits-all configurations. Instead, we take the time to understand your applications, workloads, and integration requirements, so that every system we ship is ready to perform from day one.

Built for Your Industry

No two workloads are the same—which is why we build servers tailored to the specific demands of your field. Whether you’re running large-scale simulations, training AI models, or processing massive datasets, we design systems that align with the infrastructure, tools, and performance needs that power your organization.

- Building advanced AI systems requires more than compute power; it demands architecture tailored to evolving research. Whether you’re training transformer models, deploying real-time inference pipelines, or developing multi-agent frameworks, our servers provide the reliability and performance foundation for deep learning at scale. We support a wide range of frameworks and model types, from vision and NLP to reinforcement learning and generative models.

- Heavy 3D modeling, BIM coordination, photorealistic rendering, and simulation workflows are standard in architecture, engineering, and construction (AEC) firms. Our systems are optimized for software like Revit, AutoCAD, Navisworks, Rhino, and Enscape, delivering the speed and responsiveness needed for large architectural models, real-time collaboration, and iterative design. From early concept visualization to final construction documentation, our servers handle complex files and parallel workflows with ease.

- Engineering teams in automotive and aerospace rely on simulation and modeling at massive scale. We build systems that support crash simulation, CFD, multiphysics modeling, and control system development using tools like ANSYS, LS-DYNA, MATLAB, and Simulink. Whether you're designing next-gen EVs or testing flight dynamics, our servers deliver the speed, accuracy, and uptime required for innovation in motion.

- Data science teams need systems capable of ingesting, processing, and analyzing massive datasets without delay. Our servers support every stage of the pipeline, from ETL and feature engineering to model deployment and dashboarding. Built to run tools like R, Pandas, Dask, and Spark, they are ideal for enterprise analytics, academic research, and operational AI, supporting concurrent users and complex workflows without compromise.

- Financial modeling, risk analysis, and algorithmic trading demand fast, deterministic compute. We design systems for quants and analysts running simulations, Monte Carlo analysis, machine learning models, and data-intensive forecasting. With support for low-latency networking, high-throughput storage, and parallel processing, our servers are ideal for hedge funds, fintech startups, and enterprise data science teams.

- Enterprise game studios require compute infrastructure that can keep pace with the scale and complexity of modern production. Our servers are built to accelerate workflows across large-scale world building, real-time rendering, procedural content generation, and AI-driven gameplay. Whether you’re developing in Unreal Engine, Unity, or a proprietary engine, we support intensive tasks like simulation, cinematic previsualization, automated build testing, and backend services for multiplayer environments. With centralized storage and remote-ready performance, our systems help distributed teams iterate faster and deliver at the highest quality, without infrastructure slowing them down.

- Universities, labs, and research institutions require versatile compute infrastructure that can serve diverse users and workloads. Our systems support shared environments, departmental clusters, and faculty-specific projects with ease. With configurations designed for GPU acceleration, virtualization, and container orchestration, we help institutions run everything from course environments to intensive research simulations.

- Modern legal firms and forensic teams need infrastructure capable of processing and analyzing vast quantities of documents and communications. Our servers support NLP, machine learning-based review tools, and secure high-volume storage for sensitive case data. From early data assessment to trial support, we provide scalable systems for e-discovery platforms and litigation tech environments.

- Life sciences require infrastructure that can handle complex biological data at scale. From genomics and molecular dynamics to AI-enhanced diagnostics and medical imaging, our servers support tools and workflows such as GATK, cryo-EM image processing, TensorFlow, and Biopython. Whether you're running simulations or training models on clinical datasets, we deliver systems built to meet the regulatory, storage, and compute challenges unique to this sector.

- For data scientists and ML engineers, rapid iteration is key. Our systems are purpose-built to support ML frameworks like PyTorch, TensorFlow, and JAX, with performance tuned for both training and inference. From early experimentation to production deployment, we help accelerate model development across tabular data, time series, computer vision, and natural language pipelines, whether running locally, in clusters, or in hybrid environments.

- Modern manufacturing and product design require high-performance computing for CAD/CAM workflows, digital prototyping, and simulation. Whether you're working with SolidWorks, Siemens NX, or CATIA, our systems accelerate FEA, CFD, and generative design while supporting real-time rendering and revision cycles. Ideal for teams in mechanical, electrical, or industrial design, our servers offer reliable, scalable power for the entire product lifecycle.

- Creative studios depend on consistent speed and rendering accuracy. Our servers are built for high-resolution video editing, VFX, 3D animation, virtual production, and real-time rendering. From DaVinci Resolve and Houdini to Unreal Engine and Maya, we support media pipelines that involve massive assets, collaborative workflows, and GPU-accelerated rendering, delivering predictable performance even under tight deadlines.

- Exploration and energy production require massive compute power for seismic analysis, reservoir simulation, and subsurface modeling. Our systems support applications like Petrel, Paradigm, and GOCAD, with high-bandwidth storage and multi-GPU configurations tailored for large-scale geospatial and time-series datasets. They are well suited to upstream R&D and field-level analytics in the oil, gas, and renewable sectors.

- Real-time personalization, demand forecasting, and recommendation systems require reliable, scalable compute. Our infrastructure helps e-commerce platforms run AI models for customer insights, optimize inventory planning, and deliver targeted experiences. From backend analytics to edge inference at retail locations, our servers support end-to-end retail intelligence.

- Researchers need compute environments built for precision and throughput. Our HPC systems are designed for numerically intensive simulations, modeling, and data analysis in fields like physics, chemistry, genomics, and climatology. With support for MPI, GPU acceleration, and parallel file systems, our infrastructure delivers the raw power and efficiency needed for high-volume, compute-bound workloads across academic and industrial research labs.

- City planning agencies and infrastructure firms leverage AI and simulation to model traffic, energy usage, and environmental impact. Our systems enable sensor data ingestion, spatial analysis, digital twin modeling, and long-term forecasting, all essential for managing sustainable, responsive urban environments. We support workflows in GIS, transportation modeling, and IoT integration at city-wide scale.

- Telecom operators rely on powerful systems for network simulation, fraud detection, AI-enhanced routing, and 5G deployment planning. Our servers are engineered to run data-intensive software stacks and time-sensitive inference models. Whether in core networks or at the edge, we help telcos and ISPs support infrastructure analytics, traffic optimization, and predictive maintenance.

What Goes Into a Custom Server

High-performance servers require more than raw specs—they need balanced architecture, proper thermal design, and tailored integration. Here’s what we focus on:

- 1U to 4U rackmount options, edge systems, or modular clusters to suit your deployment needs.

- Often overlooked, the supporting components ensure stability, connectivity, and upgrade flexibility. We use enterprise-grade parts for maximum uptime and expandability.

- Your server’s CPU configuration defines its raw computational throughput and memory bandwidth. For parallel processing, simulations, or multi-threaded tasks, dual-socket (2P) servers are ideal, offering more cores, higher memory capacity, and better I/O scalability. Single-socket (1P) systems can still deliver powerful performance for many AI and analytics workloads while reducing power and cost. We help you choose the right CPU platform (AMD EPYC, Intel Xeon, or emerging architectures) based on core count, clock speed, and cache requirements.

- Multi-GPU configurations for AI training, inference, or CUDA-accelerated workloads.

- ECC DDR5 memory for data integrity, available in large configurations for memory-hungry applications.

- Fast NVMe storage, optional RAID, and hot-swappable drives for high availability and throughput.

- 10/25/100 GbE and InfiniBand support for low-latency, high-bandwidth data movement.

- The motherboard is more than just a base: it determines your system’s expandability, I/O capabilities, and compatibility with accelerators like GPUs or FPGAs. We select motherboards purpose-built for server environments, with support for PCIe Gen 4 or 5, NVMe, high-speed networking, and advanced power delivery. From GPU-dense builds to storage-heavy nodes, the platform is tailored to ensure balance, efficiency, and future upgradability.

- Air or liquid cooling options depending on your environment, workload intensity, and form factor.

- Linux or Windows Server, pre-installed frameworks, and support for containerized or virtualized environments.
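To give a feel for the arithmetic behind choices like memory channels and network links, here is a rough sizing sketch in Python. It assumes the standard 64-bit (8-byte) data width per DDR5 memory channel and ignores protocol overhead on the network link. The function names, channel counts, memory speed, and dataset size are hypothetical example values, not specifications of any particular configuration.

```python
def peak_memory_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                              bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth of one CPU socket, in GB/s.

    Assumes each channel moves `bytes_per_transfer` bytes per transfer
    (8 bytes for a 64-bit data bus). Real workloads achieve less.
    """
    return channels * mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


def transfer_time_s(dataset_gb: float, link_gbps: float,
                    efficiency: float = 1.0) -> float:
    """Seconds to move `dataset_gb` gigabytes over a `link_gbps` link.

    `efficiency` (0 to 1) is a hypothetical knob for protocol overhead.
    """
    return dataset_gb * 8 / (link_gbps * efficiency)


if __name__ == "__main__":
    # Example: 8 vs. 12 channels of DDR5-4800 (illustrative values).
    for channels in (8, 12):
        bw = peak_memory_bandwidth_gbs(channels, 4800)
        print(f"{channels} channels: {bw:.1f} GB/s theoretical peak")

    # Example: moving a 1 TB (1000 GB) dataset over different Ethernet speeds.
    for gbps in (10, 25, 100):
        print(f"{gbps} GbE: {transfer_time_s(1000, gbps):.0f} s at line rate")
```

Numbers like these are upper bounds; measured throughput depends on the workload, DIMM population, and network stack, which is why we size systems against your actual applications.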

Our Process

Every organization has different goals—and we build servers to match them. Whether you're supporting a research lab, powering an AI deployment, or standing up infrastructure for real-time rendering, our process is built around clarity, precision, and performance. We work closely with you to understand your workloads, environment, and future scaling needs, so your system is built to deliver today—and adapt tomorrow.

- We start with a conversation. What workloads are you running? What software and frameworks do you rely on? Are you deploying in a rackmount cluster, at the edge, or inside a lab? We gather details about your data volume, compute intensity, user concurrency, and physical constraints.

- Based on your needs, we design a system architecture that aligns with your technical goals and operational environment. That includes choosing the right CPUs or accelerators, balancing memory and I/O, evaluating storage tiers, and ensuring thermal, power, and rack compatibility.

- We walk you through the proposed build, explaining why each component was chosen and how it will support your goals. If needed, we iterate with you to fine-tune the spec, considering budget, deployment constraints, or upcoming project phases.

- Once approved, we source and build your server using premium components. Every system undergoes rigorous thermal, stress, and stability testing to ensure reliability under real-world workloads, whether for 24/7 inference, simulation, or high-throughput research.

- Your server ships fully tested and ready to deploy. Whether you're integrating into an existing cluster or starting from scratch, we support you with documentation, burn-in logs, and optional remote guidance to ensure a smooth handoff.

- Need to add nodes, change configurations, or re-optimize your setup? We’re here for long-term partnerships. We track technology roadmaps and offer lifecycle planning to ensure your infrastructure evolves as your workload does.
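The power and rack compatibility check mentioned in the design step can be pictured as a simple budget calculation. The Python sketch below is only an illustration under stated assumptions: the `Node` type, the `fits_in_rack` helper, the 20% power headroom, and all wattage and rack-unit figures are hypothetical, and a real design review also covers cooling capacity, circuit layout, and redundancy.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One planned server (all values are hypothetical examples)."""
    name: str
    rack_units: int     # height in U
    peak_watts: float   # estimated peak power draw


def fits_in_rack(nodes: list[Node], rack_units_available: int,
                 power_budget_watts: float, headroom: float = 0.2) -> bool:
    """Return True if the nodes fit the rack space and power budget.

    `headroom` reserves a fraction of extra power above the summed
    peak draw; 0.2 (20%) is an assumed margin, not a standard.
    """
    used_units = sum(n.rack_units for n in nodes)
    peak_power = sum(n.peak_watts for n in nodes)
    return (used_units <= rack_units_available
            and peak_power * (1 + headroom) <= power_budget_watts)


if __name__ == "__main__":
    plan = [
        Node("gpu-node-1", rack_units=4, peak_watts=3000),
        Node("gpu-node-2", rack_units=4, peak_watts=3000),
        Node("storage-node", rack_units=2, peak_watts=800),
    ]
    # 10U and 6800 W peak; with 20% headroom the plan needs about 8160 W.
    print(fits_in_rack(plan, rack_units_available=42, power_budget_watts=10000))
    print(fits_in_rack(plan, rack_units_available=42, power_budget_watts=8000))
```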

Ready to Build?

Let’s talk about what you’re working on—and how we can power it.

Need help choosing the right configuration? Our team of experts is here to guide you through every step of the process.